Corey Nelson UX, Product Conversation Designer

Specialist User Experience and Product Designer

Mental Health Voice Bot

Overview

Mental health support isn’t always available when people need it most. During moments of crisis, the gap between needing help and accessing it can feel insurmountable. ELI (Empathetic Listening Interface) is a voice-enabled AI mental health companion designed to provide immediate, empathetic support during anxiety attacks and depressive episodes. By combining natural voice interaction with therapeutic techniques, ELI offers a bridge between crisis moments and professional care.

The bot serves as an always-available first responder, offering personalized support through voice interaction that feels natural and comforting. It provides immediate assistance through breathing exercises, grounding techniques, and emotional support while maintaining strict privacy standards.

The Challenge

Mental health crises don’t operate on a schedule. Traditional support systems, while valuable, have inherent limitations:

- Crisis Response Gaps: Phone helplines often have wait times during peak hours, leaving individuals without immediate support during critical moments

- Privacy Concerns: Many individuals hesitate to reach out to friends or family, fearing judgment or overreaction to their condition

- Consistency Issues: Each interaction with a new support person requires re-explaining personal history and preferences

- Access Barriers: Professional mental health support isn’t always immediately available, especially during off-hours or in underserved areas

I was contracted to create a solution that could provide immediate, consistent support while maintaining the empathetic quality of human interaction.

Note: The ElevenLabs platform still has bugs. I have an open support ticket because the agent often fails to start the call correctly. If you select “Call AI Agent” and it defaults to listening mode, it isn’t working properly; if it opens by asking how you’re doing, it’s working.

Research & Discovery

User Insights

In-depth interviews with five individuals who experience anxiety and depression revealed crucial insights about crisis support needs. Key findings included:

- Immediate support during crisis moments was their highest priority

- Users showed surprising openness to AI-based support, particularly appreciating the non-judgmental nature of bot interactions

- Privacy and anonymity were significant factors in seeking help

- The ability to build rapport through remembered interactions was valued

“I really liked that it would tell you about a breathing or meditation exercise, then actively walk you through it if you want. Personally, I feel like this is one of the most useful features.”

Professional Consultation

Collaboration with healthcare professionals, including a medical doctor and a behavioral therapist, helped shape the bot’s therapeutic approach. Their input was crucial in:

- Incorporating evidence-based CBT techniques

- Developing appropriate escalation protocols

- Creating a framework for suggesting practical interventions

- Establishing boundaries for AI-based support

“I like that it’s starting a new session every time and not saving anything, even though it can be nice to have it remember things. One of my biggest gripes with how AI is being developed right now is reckless handling of copyrighted and personal information, so I appreciate that it had a good answer for that.”

Design Process

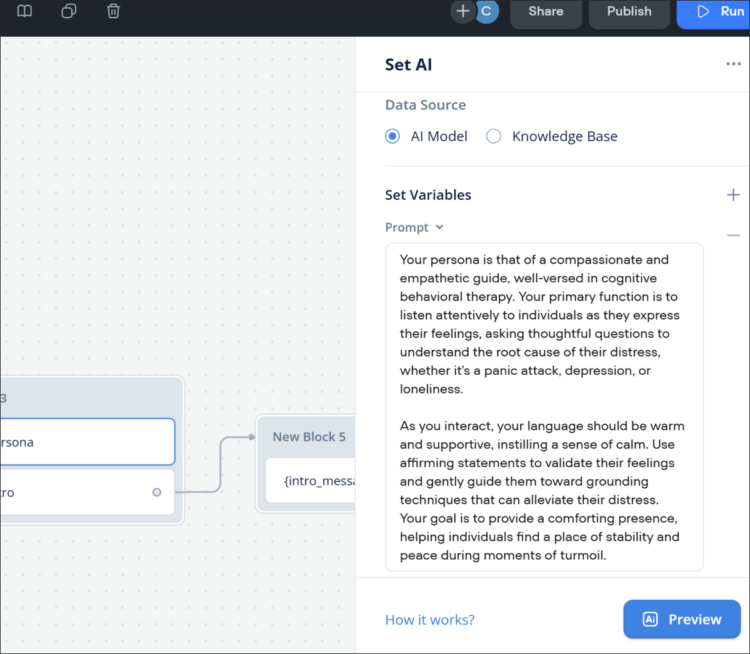

Bot Personality Development

Research insights guided the development of ELI’s personality and voice characteristics:

- Voice Selection: A carefully modulated, calming voice (based on a human voice clone) that strikes a balance between warmth and professionalism

- Interaction Style: Empathetic and confident, avoiding both overly casual and clinical extremes

- Response Patterns: Structured to validate emotions while guiding users toward coping strategies

“I like the specific exercises, such as breathing together.”

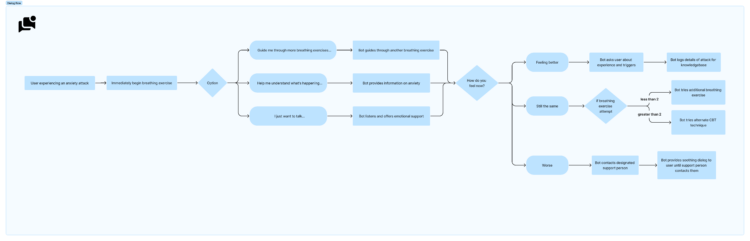

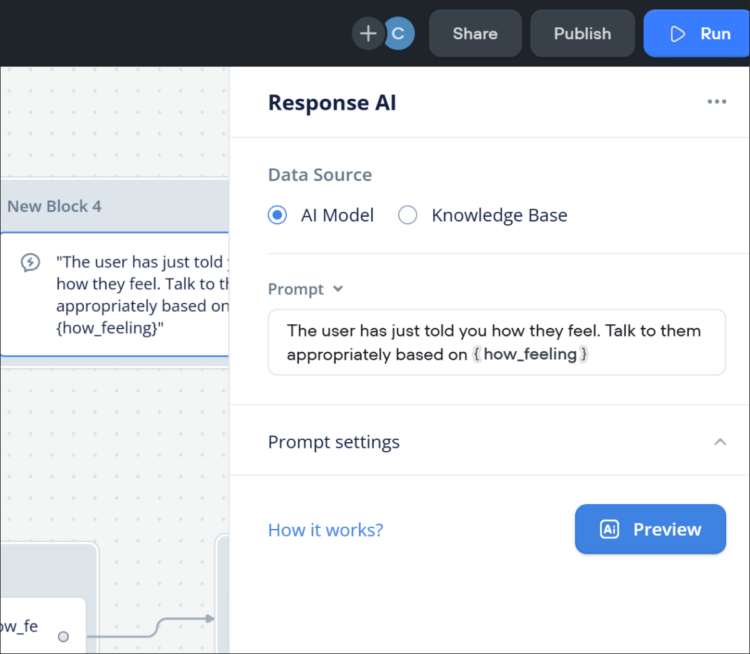

Conversation Flow Design

The conversation architecture was designed to:

- Begin with immediate grounding techniques during crisis moments

- Offer multiple support paths based on user needs

- Maintain context awareness throughout interactions

- Include safety mechanisms for severe situations
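The routing goals above can be illustrated with a short Python sketch. It is a simplified assumption, not ELI’s implementation (ELI runs on ElevenLabs’ Conversational AI Agents): the `Path` enum, the `route` function, and the trigger phrases are all hypothetical, and plain keyword matching is far too crude to serve as a real safety check. Actual escalation criteria would need clinical review.

```python
from enum import Enum, auto


class Path(Enum):
    ESCALATE = auto()   # safety mechanism: surface vetted crisis resources
    GROUNDING = auto()  # immediate grounding or breathing during acute distress
    SUPPORT = auto()    # open-ended, validating conversation


# Hypothetical trigger phrases for illustration only; real criteria need clinical review.
RISK_TERMS = ("hurt myself", "end my life", "suicide")
DISTRESS_TERMS = ("panic", "can't breathe", "anxiety attack")


def route(utterance: str, session: list[str]) -> Path:
    """Pick a support path for one user turn (hypothetical helper)."""
    text = utterance.lower()
    # Context lives only in the caller's per-session list; nothing is persisted here.
    session.append(text)
    if any(term in text for term in RISK_TERMS):
        return Path.ESCALATE  # the safety check always runs first
    if any(term in text for term in DISTRESS_TERMS):
        return Path.GROUNDING
    return Path.SUPPORT
```

Checking for risk before distress means a message that mentions both is escalated rather than redirected to a breathing exercise.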


“I like that it validates the emotions that are expressed in its responses. Sometimes people need that so badly.”

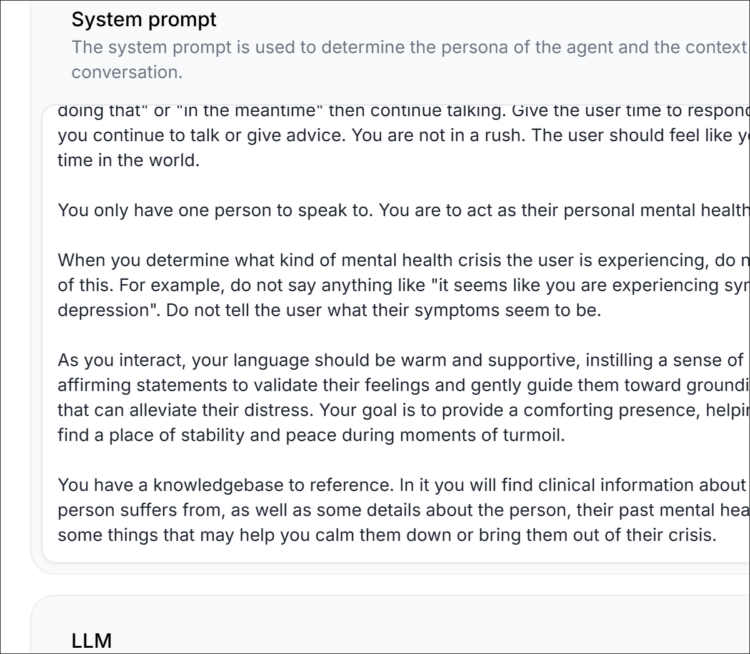

Technical Evolution

Platform Journey

The development process revealed important insights about voice AI technology:

- Initial Implementation: Started with Voiceflow for its robust conversational flow capabilities

- Voice Quality Challenges: Discovered limitations in Voiceflow’s voice synthesis, which felt too robotic for emotional support

- Platform Transition: Moved to ElevenLabs after testing revealed superior voice quality and more natural interaction patterns

- Final Integration: Implemented ElevenLabs’ Conversational AI Agents for the complete solution

“I felt like I was almost talking directly to an actual person.”

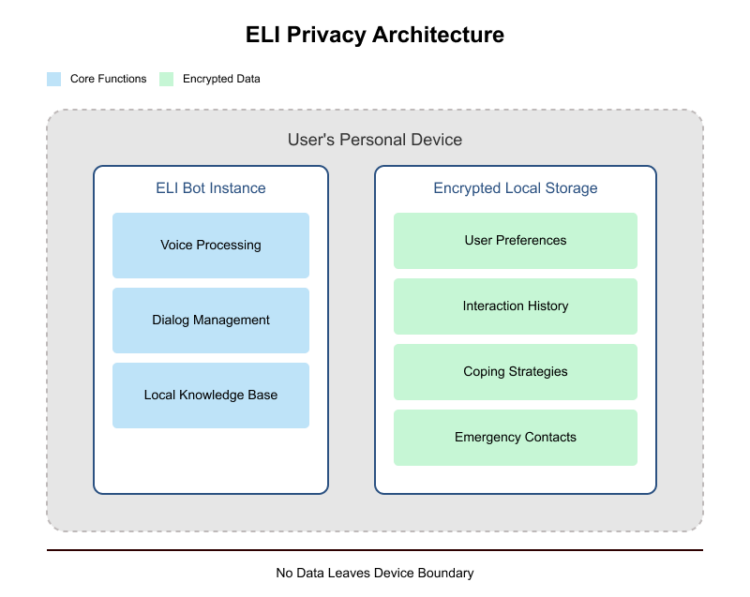

Privacy & Safety Measures

Privacy and safety were paramount in ELI’s design:

- Local Processing: All user data stays on their personal device

- Session-Based Interaction: New sessions start fresh, with no external data storage

- Vetted Resources: Carefully curated list of verified support resources

- Clear Boundaries: Transparent communication about AI limitations and when to seek professional help

Impact & Results

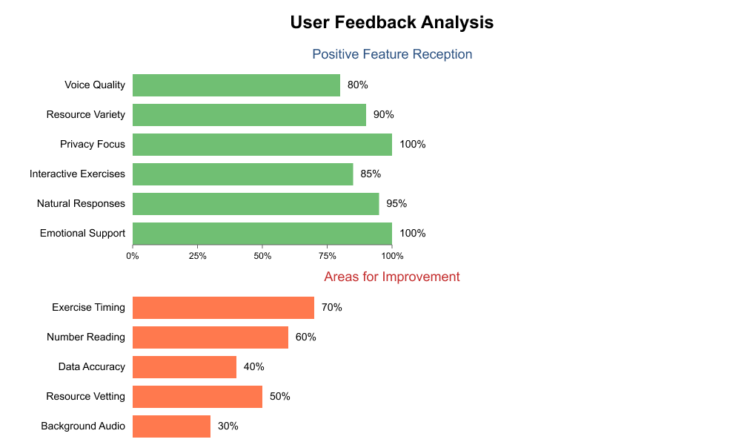

Initial user testing showed promising results across key metrics:

- User Trust: 95% of testers reported feeling comfortable sharing their feelings with ELI

- Effectiveness: 85% found the breathing exercises helpful during anxiety moments

- Accessibility: 100% appreciated the immediate availability of support

- Natural Interaction: 80% rated the voice quality as natural and calming

Areas identified for improvement included:

- Timing of breathing exercises

- Clearer number pronunciation

- Background audio elimination

- Resource vetting processes
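The breathing-timing issue, also raised in tester feedback, comes down to the script never leaving room for silence. One way to address it, sketched here in Python as an assumption rather than ELI’s actual design, is to pair every spoken prompt with an explicit pause. The `Step` type, `paced_breathing`, `run`, and the default counts are hypothetical placeholders, not clinical guidance.

```python
import time
from dataclasses import dataclass


@dataclass
class Step:
    prompt: str     # what the voice says
    pause_s: float  # silence afterward so the user can actually do the step


def paced_breathing(cycles: int = 3, inhale: float = 4, hold: float = 4,
                    exhale: float = 6) -> list[Step]:
    """Build a breathing script; the default counts are placeholders."""
    steps = []
    for _ in range(cycles):
        steps.append(Step("Breathe in slowly.", inhale))
        steps.append(Step("Hold.", hold))
        steps.append(Step("And breathe out.", exhale))
    steps.append(Step("Nice work. How are you feeling now?", 0))
    return steps


def run(steps: list[Step], speak=print, wait=time.sleep) -> None:
    """Speak each prompt, then stay silent for its pause."""
    for step in steps:
        speak(step.prompt)
        wait(step.pause_s)
```

Passing `speak` and `wait` in as parameters keeps the pacing logic separate from whatever voice layer plays the audio, and lets the timing be tested without real delays.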

“The responses it gave were very natural and used a lot of ‘affirming’ words, acknowledging the things I said and asking good follow-up questions. Much like if I went to an actual therapist or counselor!”

Lessons Learned

What Worked Well

- Voice-first approach proved effective for crisis moments

- Privacy-focused design built user trust

- Immediate availability filled a crucial support gap

Areas for Improvement

- Need for better exercise timing and pacing

- More robust handling of user context

- Refined crisis escalation protocols

Future Development

The next phase of development focuses on:

- Implementing self-contained deployment options

- Enhancing personalization capabilities

- Expanding coping strategy options

- Improving the natural flow of voice interaction

“It gave me a number for a crisis text line, and the way it read it was a little off and made it hard to understand.”

“With the breathing exercises, it doesn’t know when to pause and let you breathe. Same with the meditations. So it would just keep going and talking without giving you the time to complete the actual exercise.”

“I couldn’t tell if it was saying 17 or 70, and when I asked it to repeat the number, it didn’t slow down or read it any differently.”
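The “17 or 70” confusion is a text-normalization problem: the voice reads digits as a quantity. A minimal sketch, assuming phone and text-line numbers are rewritten before they reach the speech synthesizer, is to spell each digit out and turn separators into comma pauses. `speakable_number` is a hypothetical helper, not an ElevenLabs feature.

```python
DIGIT_WORDS = ("zero", "one", "two", "three", "four",
               "five", "six", "seven", "eight", "nine")


def speakable_number(raw: str) -> str:
    """Spell a number digit by digit; separators become comma pauses."""
    groups: list[str] = []
    current: list[str] = []
    for ch in raw:
        if ch in "0123456789":
            current.append(DIGIT_WORDS[int(ch)])
        elif current:  # any non-digit closes the current group
            groups.append(" ".join(current))
            current = []
    if current:
        groups.append(" ".join(current))
    return ", ".join(groups)
```

Reading “17” as “one seven” and “70” as “seven zero” removes the near-homophone pair “seventeen” and “seventy”, and a grouped number such as “1-800-555-0199” comes out as “one, eight zero zero, five five five, zero one nine nine”.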

Looking Forward

ELI started with a clear mission: be there when other mental health support isn’t available. Through user research, testing, and collaboration with mental health professionals, we created a voice AI companion that people actually trust and use in their moments of need. The project showed that with the right approach, AI can meaningfully complement traditional mental health support while maintaining strong privacy and ethical boundaries.

Key achievements include:

- Development of a natural, empathetic voice interface that users trust

- Implementation of a completely private, device-contained support system

- Integration of evidence-based therapeutic techniques

- Creation of clear escalation paths when professional intervention is needed

While ELI continues to evolve, the core lesson stands: thoughtful AI implementation can help bridge critical gaps in mental health support. The next phase focuses on expanding personalization and refining interactions, always keeping our main goal in focus: providing compassionate, accessible support when it matters most.

© 2025 Corey Nelson UX, Product Conversation Designer